Training Course on Text Generation and Summarization with Deep Learning

Course Overview

Introduction

This training course introduces participants to the transformative power of Deep Learning in Natural Language Processing (NLP), with a specific focus on Text Generation and Text Summarization. In an era of exponential data growth, the ability to automatically create compelling content and distill vast amounts of information into concise, actionable insights is a critical skill for businesses and individuals alike. The program provides a comprehensive understanding of state-of-the-art Transformer-based models such as BERT and GPT and their practical applications, empowering attendees to build sophisticated NLP models for a range of industry needs.

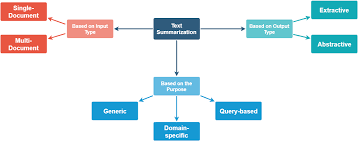

Leveraging the latest advancements in Generative AI and Neural Networks, this course moves beyond theoretical concepts to emphasize hands-on implementation and practical problem-solving. Participants will gain proficiency in techniques for generating coherent text, from creative content to structured reports, and master both extractive and abstractive summarization methods. By focusing on real-world case studies and industry best practices, this training equips professionals with the skills to automate content creation, enhance information retrieval, and unlock new efficiencies in diverse domains, from marketing and customer service to legal and financial analysis.

Course Duration

10 days

Course Objectives

- Understand core neural network architectures (RNNs, LSTMs, Transformers) for NLP.

- Implement Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) for creative text output.

- Develop models capable of generating novel, human-like summaries using Sequence-to-Sequence (Seq2Seq) and Attention Mechanisms.

- Build systems to identify and extract key sentences from large documents using BERT-based models and graph-based ranking.

- Effectively fine-tune and leverage Large Language Models (LLMs) like GPT-series and BART for specific text generation and summarization tasks.

- Critically assess the quality of generated and summarized text using metrics like ROUGE and BLEU.

- Apply techniques to improve the grammaticality and contextual flow of generated text.

- Learn how the Transformer architecture overcomes the limitations of traditional RNNs in processing lengthy documents.

- Adapt general models for industry-specific content generation and summarization.

- Implement ethical AI practices by identifying and mitigating biases in AI-generated content and summaries.

- Explore strategies for hyperparameter tuning, transfer learning, and model deployment for efficiency.

- Understand how text generation and summarization tools can automate and enhance business processes.

- Grasp emerging concepts like multimodal summarization and explainable AI in NLP.

Organizational Benefits

- Significantly reduce manual effort in content creation, leading to faster content production and increased output (e.g., automated report generation, personalized marketing content, dynamic website content).

- Quickly distill vast amounts of textual data (e.g., customer feedback, research papers, legal documents) into actionable insights, improving decision-making and reducing information overload.

- Automate responses and generate concise summaries of customer interactions for faster support and personalized communication.

- Free up human resources from repetitive text-based tasks, allowing teams to focus on strategic initiatives and higher-value activities.

- Leverage cutting-edge AI capabilities to innovate products, services, and internal operations, staying ahead in the data-driven economy.

- Minimize operational costs associated with manual content creation, analysis, and summarization.

- Gain deeper insights from unstructured text data, enabling more informed and proactive business strategies.

Target Audience

- Data Scientists & Machine Learning Engineers.

- AI/NLP Researchers.

- Software Developers.

- Content Strategists & Marketers.

- Business Analysts.

- Technical Writers & Journalists.

- Product Managers.

- Academics & Students.

Course Outline

Module 1: Introduction to Deep Learning for NLP

- Overview of NLP and its challenges in the age of big data.

- Fundamentals of Neural Networks: perceptrons, activation functions, backpropagation.

- Introduction to Word Embeddings: Word2Vec, GloVe, FastText.

- Setting up the Deep Learning environment (TensorFlow/PyTorch).

- Case Study: Sentiment Analysis using basic neural networks on customer reviews.

Module 2: Recurrent Neural Networks (RNNs) & LSTMs

- Understanding sequence data and the need for RNNs.

- Long Short-Term Memory (LSTM) networks for capturing long-range dependencies.

- Gated Recurrent Units (GRUs) and their advantages.

- Bidirectional RNNs for enhanced contextual understanding.

- Case Study: Generating short creative sentences (e.g., poetry lines) using LSTMs.

Module 3: Advanced Architectures: Transformers & Attention Mechanisms

- The limitations of RNNs for very long sequences.

- Introduction to the Transformer architecture: self-attention, multi-head attention.

- Encoder-Decoder architecture in Transformers.

- Positional Encoding and its role.

- Case Study: Machine Translation of short phrases demonstrating attention in action.
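
As a taste of the self-attention topic in this module, the following is a minimal sketch of single-head scaled dot-product attention in plain Python. It is an illustration only, not course material: the toy `tokens` vectors and the function names are assumptions, and a real Transformer would also apply learned query, key, and value projections and use several heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for single-head attention.

    queries and keys are lists of vectors of the same dimension d_k;
    values holds one vector per key.
    """
    d_k = len(keys[0])
    dim_v = len(values[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy self-attention: the same three 2-d token vectors act as Q, K and V
# (hypothetical values chosen only for illustration).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and shows how strongly one token attends to every other token, which is the quantity usually visualized when demonstrating "attention in action".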

Module 4: Pre-trained Language Models (PLMs)

- The concept of Transfer Learning in NLP.

- Understanding BERT (Bidirectional Encoder Representations from Transformers).

- Fine-tuning BERT for specific downstream tasks.

- Introduction to GPT (Generative Pre-trained Transformer) and its auto-regressive nature.

- Case Study: Classifying legal documents by fine-tuning BERT.

Module 5: Text Generation Fundamentals

- Defining text generation: challenges and applications.

- Decoding strategies: Greedy search, Beam search, Top-K, Nucleus sampling.

- Measuring creativity and diversity in generated text.

- Addressing repetition and incoherence in generated outputs.

- Case Study: Generating product descriptions for an e-commerce website with varying creativity levels.
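
To make the decoding strategies listed in this module concrete, here is a small sketch of greedy selection, Top-K filtering, and Nucleus (top-p) filtering applied to a single next-token distribution. The distribution `next_token_probs` is invented for illustration; in practice it would come from a language model. Beam search is omitted because it needs a model that can score whole sequences.

```python
import random

def greedy(probs):
    """Pick the single most probable token."""
    return max(probs, key=probs.get)

def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}

def nucleus_filter(probs, top_p):
    """Keep the smallest set of top tokens whose cumulative probability reaches top_p."""
    kept, cumulative = [], 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}

def sample(probs, rng):
    """Draw one token according to the (already filtered) distribution."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical next-token distribution after a prompt such as "This blanket is".
next_token_probs = {"soft": 0.5, "durable": 0.25, "blue": 0.125, "loud": 0.125}

rng = random.Random(0)  # fixed seed so runs are repeatable
greedy_choice = greedy(next_token_probs)
top2 = top_k_filter(next_token_probs, k=2)
nucleus = nucleus_filter(next_token_probs, top_p=0.8)
sampled = sample(nucleus, rng)
```

Lowering `k` or `top_p` makes output more predictable, while raising them admits less likely tokens, which is one way to vary the "creativity level" of generated text.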

Module 6: Advanced Text Generation with LLMs

- Leveraging GPT-series models for diverse text generation tasks.

- Prompt engineering: crafting effective prompts for desired outputs.

- Controlling text attributes: tone, style, length.

- Ethical considerations in large language model text generation.

- Case Study: Generating marketing copy for social media campaigns using GPT-3- and GPT-4-based APIs.

Module 7: Extractive Text Summarization

- Introduction to extractive summarization techniques.

- Feature-based methods: TF-IDF, sentence scoring.

- Graph-based methods: TextRank, LexRank.

- Leveraging BERT for extractive summarization (e.g., BERTSUM).

- Case Study: Summarizing news articles by extracting key sentences.
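
The feature-based approach in this module can be sketched in a few lines: score every sentence by the TF-IDF weight of its words, then keep the top-scoring sentences in their original order. This is a simplified illustration under stated assumptions (each sentence is treated as its own document, the IDF is smoothed, and the sample `article` is invented); graph-based methods such as TextRank and BERT-based extractors are not shown.

```python
import math
import re
from collections import Counter

def tokenize(sentence):
    """Lowercase and split into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", sentence.lower())

def tfidf_sentence_scores(sentences):
    """Score each sentence by the sum of its words' TF-IDF weights.

    Term frequency is normalized by sentence length, and each sentence
    counts as one document when computing document frequency.
    """
    tokenized = [tokenize(s) for s in sentences]
    n = len(tokenized)
    doc_freq = Counter(word for words in tokenized for word in set(words))
    scores = []
    for words in tokenized:
        if not words:
            scores.append(0.0)
            continue
        counts = Counter(words)
        # Smoothed IDF, log((1 + n) / (1 + df)) + 1, so weights stay positive.
        scores.append(sum(
            (count / len(words)) * (math.log((1 + n) / (1 + doc_freq[w])) + 1)
            for w, count in counts.items()
        ))
    return scores

def summarize(sentences, k=2):
    """Return the k highest-scoring sentences in their original order."""
    scores = tfidf_sentence_scores(sentences)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]

# Hypothetical four-sentence news item used only for illustration.
article = [
    "The central bank raised interest rates by a quarter point on Tuesday.",
    "It was the third increase this year.",
    "Officials said inflation remains above the two percent target.",
    "Markets had widely expected the move.",
]
summary = summarize(article, k=2)
```

Because this scoring rewards rare words, it tends to favor short, distinctive sentences; practical systems usually add position, length, or redundancy features on top.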

Module 8: Abstractive Text Summarization

- Challenges of abstractive summarization vs. extractive.

- Sequence-to-Sequence (Seq2Seq) models with attention for summarization.

- Using Transformer-based models for abstractive summarization (e.g., BART, T5).

- Handling factual consistency and hallucination in abstractive summaries.

- Case Study: Generating concise meeting minutes from detailed transcripts.

Module 9: Evaluation of Text Generation & Summarization Models

- Quantitative metrics for text generation: Perplexity.

- Quantitative metrics for summarization: ROUGE (Recall-Oriented Understudy for Gisting Evaluation).

- BLEU (Bilingual Evaluation Understudy) for generation.

- Human evaluation and qualitative analysis.

- Case Study: Comparing different summarization models' performance on a dataset of scientific abstracts using ROUGE scores.
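
For orientation, the metrics named in this module reduce to short formulas. The sketch below computes ROUGE-N precision, recall, and F1 from clipped n-gram overlap, and perplexity from per-token probabilities. It assumes whitespace tokenization and a single reference; the example sentences and probabilities are made up, and published scores normally come from established packages that also handle stemming and multiple references.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n(candidate, reference, n=1):
    """Return (precision, recall, f1) from clipped n-gram overlap."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return precision, recall, f1

def perplexity(token_probs):
    """exp of the mean negative log-probability the model gave each observed token."""
    return math.exp(-sum(math.log(p) for p in token_probs) / len(token_probs))

reference = "the cat sat on the mat"
candidate = "the cat lay on the mat"
p1, r1, f1 = rouge_n(candidate, reference, n=1)  # 5 of 6 unigrams match
p2, r2, f2 = rouge_n(candidate, reference, n=2)  # 3 of 5 bigrams match

# A model that assigns probability 0.25 to every token has perplexity 4.
ppl = perplexity([0.25, 0.25, 0.25, 0.25])
```

ROUGE emphasizes recall against the reference summary, while BLEU is built on n-gram precision with a brevity penalty; neither measures factual correctness, which is why human evaluation remains part of the module.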

Module 10: Fine-tuning and Customization

- Strategies for fine-tuning pre-trained models on custom datasets.

- Data preparation and preprocessing for specific tasks.

- Transfer learning best practices and considerations.

- Domain adaptation for improved relevance.

- Case Study: Customizing a text generation model to produce medical reports based on clinical notes.

Module 11: Deployment and Scalability

- Strategies for deploying Deep Learning NLP models.

- API design for text generation and summarization services.

- Containerization (Docker) and orchestration (Kubernetes) for scalable deployment.

- Monitoring and maintaining deployed models.

- Case Study: Building a production-ready summarization API for a legal tech company.

Module 12: Ethical Considerations and Bias in NLP

- Identifying and mitigating bias in training data and model outputs.

- Fairness, accountability, and transparency in AI.

- Responsible use of text generation and summarization technologies.

- Addressing potential misuse of generated content (e.g., misinformation).

- Case Study: Analyzing and discussing gender bias in a pre-trained language model's generated text.

Module 13: Real-world Applications & Industry Insights

- Text generation in marketing, journalism, and creative writing.

- Summarization in finance, healthcare, and customer service.

- Chatbots and conversational AI powered by text generation.

- Legal tech: contract summarization and document generation.

- Case Study: How a financial institution uses automated summarization for market trend analysis from news feeds.

Module 14: Future Trends in Text Generation & Summarization

- Multimodal Text Generation (text + images/video).

- Controllable Text Generation (fine-grained control over output attributes).

- Parameter-Efficient Fine-Tuning (PEFT) techniques.

- The role of Reinforcement Learning in NLP.

- Case Study: Exploring research papers on generating descriptive captions for images.

Module 15: Capstone Project & Best Practices

- Participants work on a hands-on project applying learned techniques.

- Project planning, execution, and presentation.

- Best practices for developing robust and efficient NLP solutions.

- Troubleshooting common issues in Deep Learning for text.

- Case Study: Developing a custom solution for generating summaries of academic papers for a research institution.

Training Methodology

This course adopts a highly interactive and practical training methodology, combining theoretical lectures with extensive hands-on coding exercises, real-world case studies, and collaborative project work. Participants will engage in:

- Instructor-led sessions: Clear explanations of complex concepts with visual aids.

- Live coding demonstrations: Step-by-step implementation of models and algorithms.

- Hands-on labs: Practical exercises to reinforce learning and build proficiency.

- Case study analysis: In-depth examination of industry applications and challenges.

- Group discussions & problem-solving: Collaborative learning and knowledge sharing.

- Mini-projects: Application of learned skills to solve specific NLP problems.

- Q&A sessions: Dedicated time for addressing participant queries and challenges.

Register as a group of 3 or more participants for a discount.

Send us an email: info@datastatresearch.org or call +254724527104

Certification

Upon successful completion of this training, participants will be issued a globally recognized certificate.

Tailor-Made Course

We also offer tailor-made courses based on your needs.

Key Notes

a. The participant must be conversant with English.

b. Upon completion of the training, the participant will be issued an Authorized Training Certificate.

c. Course duration is flexible and the contents can be modified to fit any number of days.

d. The course fee includes facilitation, training materials, 2 coffee breaks, a buffet lunch, and a certificate upon successful completion of the training.

e. One year of post-training support, consultation, and coaching is provided after the course.

f. Payment should be made at least one week before the training commences, to the DATASTAT CONSULTANCY LTD account indicated in the invoice, to enable us to prepare better for you.