Training Course on Cloud MLOps on GCP (Vertex AI Advanced)

Course Overview

Introduction

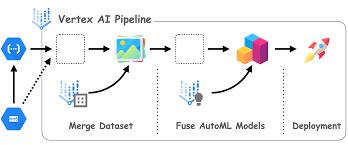

This course provides a deep dive into Cloud MLOps on Google Cloud Platform (GCP), using the advanced capabilities of Vertex AI. Participants gain hands-on expertise in building, deploying, monitoring, and managing robust machine learning (ML) pipelines in a production environment. The curriculum focuses on advanced techniques for ML model lifecycle management, automation, scalability, and governance within the GCP ecosystem, so that participants are equipped to operationalize AI at enterprise scale.

The course emphasizes practical application through real-world case studies and hands-on labs covering feature engineering, model versioning, CI/CD for ML, model monitoring, drift detection, and responsible AI. By working with Vertex AI's suite of tools, attendees learn to optimize ML workflows, improve model performance, and drive business value through efficient, reliable AI deployments on the cloud.

Course Duration

10 days

Course Objectives

- Master End-to-End MLOps Pipeline Automation on GCP using Vertex AI.

- Implement robust Data Versioning and Model Versioning strategies for reproducibility.

- Design and deploy scalable ML Training Workflows with Vertex AI Training and Custom Jobs.

- Leverage Vertex AI Feature Store for efficient feature management and serving.

- Develop and automate Continuous Integration (CI) and Continuous Delivery (CD) pipelines for ML models.

- Implement advanced Model Monitoring techniques for performance, drift, and anomaly detection.

- Configure and optimize Model Serving and Deployment strategies using Vertex AI Endpoints and online/batch prediction.

- Understand and apply Explainable AI (XAI) techniques with Vertex AI Explainable AI.

- Orchestrate complex ML Workflows using Vertex AI Pipelines (Kubeflow Pipelines on GCP).

- Ensure Responsible AI practices including fairness, bias detection, and transparency in MLOps.

- Optimize Resource Management and cost efficiency for ML workloads on GCP.

- Troubleshoot and debug Production ML Systems effectively.

- Integrate Security and Governance best practices into MLOps workflows.

Organizational Benefits

- Significantly reduce time-to-market for new AI capabilities.

- Ensure consistent, high-performing ML models in production.

- Automate repetitive tasks, freeing up data scientists and ML engineers.

- Foster seamless teamwork with versioned and auditable ML workflows.

- Efficiently manage growing ML workloads and control infrastructure costs on GCP.

- Proactively detect and mitigate model degradation and data drift.

- Empower organizations with timely and accurate AI-powered insights.

- Establish clear audit trails and adherence to regulatory requirements for ML systems.

Target Audience

- Machine Learning Engineers

- Data Scientists

- DevOps Engineers

- Cloud Architects

- MLOps Practitioners

- AI/ML Team Leads and Managers

- Software Engineers

- Anyone involved in deploying and managing ML models on GCP

Course Outline

Module 1: Introduction to MLOps and GCP Ecosystem

- MLOps Fundamentals: Understanding the ML lifecycle, challenges, and the need for MLOps.

- GCP for MLOps Overview: Introduction to key GCP services for ML.

- Vertex AI: Unified ML Platform: Exploring Vertex AI's capabilities across the ML lifecycle.

- MLOps Maturity Model: Assessing organizational MLOps readiness.

- Case Study: An early-stage startup struggling with inconsistent model deployments, and how MLOps principles could help.

Module 2: Data Management and Feature Engineering with GCP

- Data Ingestion and Storage: Cloud Storage, BigQuery, and Dataflow for large-scale data.

- Data Versioning with DVC or lakeFS on GCP: Managing datasets for reproducibility.

- Vertex AI Feature Store: Building, managing, and serving features at scale.

- Feature Engineering Pipelines: Automating feature creation and transformation.

- Case Study: A retail company using Vertex AI Feature Store to manage customer behavior features for personalized recommendations.

Module 3: Model Development and Experiment Tracking

- Vertex AI Workbench and Notebooks: Collaborative development environments.

- Custom Training in Vertex AI: Running training jobs with custom code and containers.

- Experiment Tracking with Vertex AI Experiments: Logging metrics, parameters, and artifacts.

- Hyperparameter Tuning with Vertex AI Vizier: Optimizing model performance automatically.

- Case Study: A financial institution using Vertex AI Experiments to track hundreds of fraud detection model variations and identify the best performers.

Module 4: Model Versioning and Registration

- Vertex AI Model Registry: Centralized repository for managing ML models.

- Model Versioning Best Practices: Tracking model lineage and artifacts.

- Model Metadata Management: Storing essential information about models.

- Model Governance and Approval Workflows: Establishing processes for model promotion.

- Case Study: A healthcare provider managing different versions of disease prediction models in Vertex AI Model Registry for auditability and regulatory compliance.

Module 5: Building ML Pipelines with Vertex AI Pipelines

- Introduction to Kubeflow Pipelines: Understanding directed acyclic graphs (DAGs) for ML workflows.

- Vertex AI Pipelines Components: Creating reusable and modular pipeline components.

- Orchestrating ML Workflows: Defining and running complex pipelines.

- Parameterizing and Scheduling Pipelines: Automating pipeline execution.

- Case Study: An e-commerce company building a Vertex AI Pipeline for automated new product recommendation model training and deployment.
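
To make the DAG idea from this module concrete, here is a minimal sketch in plain Python. It does not use the Kubeflow Pipelines SDK; the step names and the `PIPELINE` mapping are hypothetical and chosen only for illustration. It shows how declaring dependencies between steps is enough to derive a valid execution order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest_data": set(),
    "validate_data": {"ingest_data"},
    "engineer_features": {"validate_data"},
    "train_model": {"engineer_features"},
    "evaluate_model": {"train_model", "validate_data"},
    "deploy_model": {"evaluate_model"},
}


def execution_order(dag):
    """Return one valid run order; raises graphlib.CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(PIPELINE)
print(order)
```

A pipeline orchestrator applies the same principle at larger scale: a step starts only once every step it depends on has finished, and steps with no dependency between them are free to run in parallel.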

Module 6: CI/CD for Machine Learning

- Integrating Git with GCP: Source control management for ML code.

- Cloud Build for ML CI/CD: Automating build, test, and deployment of ML artifacts.

- Triggering Pipelines: Continuous integration for data and code changes.

- Automated Model Testing and Validation: Ensuring model quality before deployment.

- Case Study: A logistics company implementing CI/CD with Cloud Build to ensure continuous updates to their delivery route optimization models.
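
As an illustration of automated model validation before deployment, the sketch below shows a simple promotion gate that a CI step could call. The `EvalReport` fields, the `should_promote` name, and the default thresholds are all assumptions made for this example, not part of Cloud Build or Vertex AI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalReport:
    """Hypothetical evaluation summary produced by a test stage."""
    f1: float
    p95_latency_ms: float


def should_promote(candidate: EvalReport, production: EvalReport,
                   min_f1_gain: float = 0.01,
                   latency_budget_ms: float = 100.0) -> bool:
    """Approve the candidate only if it beats production and stays within the latency budget."""
    improves = candidate.f1 - production.f1 >= min_f1_gain
    fast_enough = candidate.p95_latency_ms <= latency_budget_ms
    return improves and fast_enough


production = EvalReport(f1=0.85, p95_latency_ms=70.0)
candidate = EvalReport(f1=0.90, p95_latency_ms=80.0)
print(should_promote(candidate, production))
```

In a CI/CD pipeline, a build step would typically exit with a non-zero status when the gate returns `False`, which stops the deployment stage from running.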

Module 7: Model Deployment and Serving

- Vertex AI Endpoints for Online Prediction: Deploying models for real-time inference.

- Batch Prediction with Vertex AI Batch Prediction: Processing large datasets offline.

- Custom Containers for Model Serving: Packaging models with custom logic.

- Model Scaling and Load Balancing: Handling high inference traffic.

- Case Study: A media company deploying a content recommendation model using Vertex AI Endpoints to serve millions of real-time requests.

Module 8: Advanced Model Monitoring and Alerting

- Data Drift Detection: Identifying changes in input data distributions.

- Concept Drift Detection: Monitoring changes in the relationship between input and target variables.

- Model Performance Monitoring: Tracking key metrics (accuracy, precision, recall, F1-score).

- Vertex AI Model Monitoring: Setting up automated monitoring and alerts.

- Case Study: A fraud detection system using Vertex AI Model Monitoring to detect shifts in transaction patterns, triggering retraining when data drift is significant.
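
The data drift idea from this module can be sketched in a few lines of plain Python. The example compares the binned distribution of one feature at training time and at serving time using Jensen-Shannon divergence, one commonly used distance for this purpose. The bin edges, sample data, and the 0.1 threshold are assumptions for illustration; this is a generic sketch, not the implementation used by any managed monitoring service.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Fraction of values in each bin; `edges` are interior cut points,
    so out-of-range values fall into the first or last bin."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def js_divergence(p, q):
    """Jensen-Shannon divergence in bits: 0 for identical, 1 for disjoint distributions."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x, y):
        return sum(a * math.log2(a / b) for a, b in zip(x, y) if a > 0)

    return (kl(p, m) + kl(q, m)) / 2


def has_drifted(training, serving, edges, threshold=0.1):
    """Flag drift when the divergence exceeds a (hypothetical) threshold."""
    return js_divergence(bin_shares(training, edges), bin_shares(serving, edges)) > threshold


EDGES = [3, 6]                                    # hypothetical cut points
training = [i % 10 for i in range(1000)]          # values 0..9
serving = [5 + i % 10 for i in range(1000)]       # same shape, shifted by 5

print(has_drifted(training, training, EDGES))
print(has_drifted(training, serving, EDGES))
```

The threshold is a tuning decision: a low value raises alerts on small seasonal shifts, while a high value may miss gradual drift until model quality has already degraded.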

Module 9: Model Retraining and Automation

- Automated Retraining Strategies: Triggering retraining based on performance degradation or data drift.

- Retraining Pipelines: Integrating retraining into the MLOps workflow.

- Model Rollback and Version Management: Safely deploying new model versions and reverting to earlier ones when needed.

- A/B Testing for Models: Comparing different model versions in production.

- Case Study: An advertising platform automating the retraining of its click-through rate prediction model based on observed concept drift, improving ad targeting.
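
A/B testing and rollback both come down to controlling what share of traffic each model version receives. The sketch below is a generic, assumption-laden illustration: `assign_version`, the version names, and the hash-based "sticky" routing are hypothetical choices for this example and do not describe how any particular serving platform splits traffic.

```python
import hashlib


def assign_version(request_key: str, split: dict[str, int]) -> str:
    """Deterministically route a key to a model version by percentage split."""
    if sum(split.values()) != 100:
        raise ValueError("traffic percentages must sum to 100")
    # Hash the key into one of 100 buckets so the same key always gets the same version.
    bucket = int(hashlib.sha256(request_key.encode()).hexdigest(), 16) % 100
    upper = 0
    for version, percent in split.items():
        upper += percent
        if bucket < upper:
            return version
    raise AssertionError("unreachable when percentages sum to 100")


ab_test = {"model-v1": 90, "model-v2": 10}    # canary: 10% of keys see v2
rollback = {"model-v1": 100, "model-v2": 0}   # rollback: all traffic back to v1

print(assign_version("user-42", ab_test))
print(assign_version("user-42", rollback))
```

Hashing a stable key such as a user ID keeps each user on one version for the duration of the experiment, which makes per-version metrics easier to compare. Rolling back is then a configuration change rather than a redeployment.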

Module 10: Explainable AI (XAI) on GCP

- Importance of Model Interpretability: Understanding model predictions.

- Vertex AI Explainable AI: Using integrated tools for explanations.

- Feature Attributions (SHAP, LIME): Identifying important features for predictions.

- Visualizing Explanations: Interpreting model behavior.

- Case Study: A credit scoring agency using Vertex AI Explainable AI to provide transparent explanations for loan approval decisions to customers and regulators.
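
To show what a feature attribution is, the following sketch computes exact Shapley values for a tiny, hypothetical scoring function. The `predict` function, the instance, and the all-zero baseline are invented for illustration. Exact computation enumerates every feature subset, so it is only practical for a handful of features; production tools rely on approximations such as sampling, and this sketch is not the implementation of any of them.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attributions; features outside a coalition take their baseline value."""
    n = len(instance)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        attributions.append(phi)
    return attributions


def predict(x):
    """Hypothetical model: two linear terms plus one interaction."""
    return 2 * x[0] + 3 * x[1] + x[0] * x[2]


instance, baseline = [1, 2, 3], [0, 0, 0]
phi = shapley_values(predict, instance, baseline)
print(phi)
print(sum(phi), predict(instance) - predict(baseline))
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline. The interaction term `x[0] * x[2]` is shared equally between the two features involved, which is why the choice of baseline matters when explaining decisions to customers and regulators.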

Module 11: Responsible AI and MLOps Governance

- Fairness and Bias Detection: Identifying and mitigating biases in ML models.

- Data Governance and Privacy: Ensuring ethical data usage in MLOps.

- Security in MLOps Pipelines: Protecting ML assets and data.

- Auditability and Compliance: Establishing clear records of ML model changes.

- Case Study: A human resources firm implementing Responsible AI practices within their MLOps pipeline on GCP to ensure fairness in resume screening models.

Module 12: MLOps Cost Optimization and Resource Management

- Optimizing Compute Resources: Understanding GPUs, TPUs, and custom machine types.

- Cost Monitoring and Budgeting: Tracking GCP MLOps expenditures.

- Resource Allocation Strategies: Efficiently managing resources for training and inference.

- Serverless MLOps Components: Leveraging Cloud Functions and Cloud Run for cost savings.

- Case Study: A geospatial analytics company optimizing their large-scale image processing ML workflows on GCP to reduce compute costs significantly.

Module 13: Troubleshooting and Debugging Production ML Systems

- Logging and Monitoring with Cloud Logging and Cloud Monitoring: Centralized insights.

- Debugging ML Pipelines: Identifying issues in complex workflows.

- Alerting and Incident Response: Setting up notifications for production anomalies.

- Best Practices for Production Readiness: Checklists and common pitfalls.

- Case Study: An IoT company troubleshooting performance degradation in their predictive maintenance models using Cloud Logging and Monitoring.

Module 14: Advanced MLOps Patterns and Architectures

- Multi-Cloud MLOps Considerations: Brief overview of hybrid approaches.

- Real-time MLOps Architectures: Designing low-latency inference systems.

- Federated Learning and Edge MLOps: Emerging trends.

- MLOps with Large Language Models (LLMs): Operationalizing generative AI.

- Case Study: A smart city initiative exploring edge MLOps for real-time traffic analysis on resource-constrained devices.

Module 15: Capstone Project and Future Trends

- End-to-End MLOps Project: Participants apply learned concepts to a comprehensive project.

- Best Practices Review: Consolidating key MLOps principles.

- Emerging MLOps Tools and Technologies: Staying ahead of the curve.

- Certification Pathways: Guiding participants toward relevant Google Cloud certifications, such as the Professional Machine Learning Engineer certification.

- Case Study: Participants developing and deploying a sentiment analysis model for customer feedback, showcasing a complete MLOps lifecycle on Vertex AI.